Rails 5 - Much Faster Collection Rendering

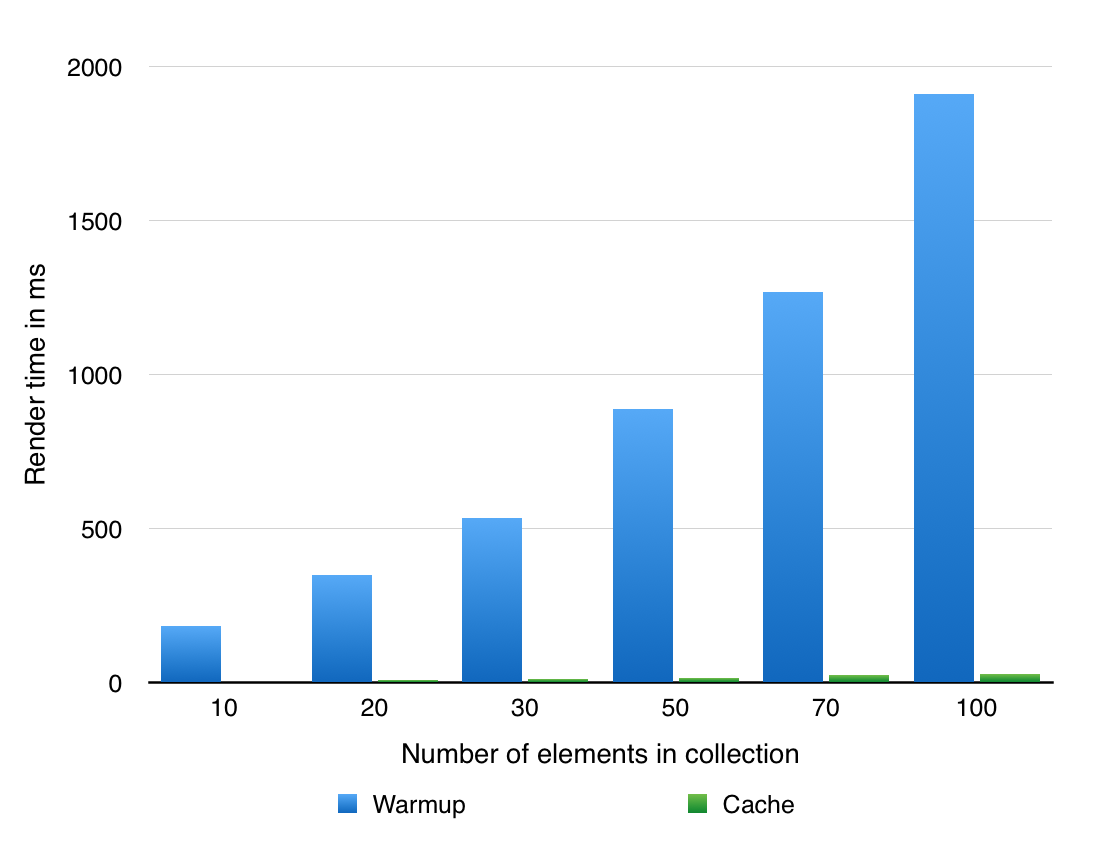

For Rails 5 we’re massively speeding up rendering collections of cached partials. Check this out:

That’s for a template with a simulated render time of at least 30 ms using the Active Support file cache store. On average the cached renders were 55 times faster.

It started simple enough. A plea for integrating a gem into Rails. By merging the multi_fetch_fragments gem, we could do <%= render @notifications, cache: true %> and batch fetch from the partial cache. This would be much faster than the current way of issuing a request to the caching harness for every template.

The gem reports rosy numbers similar to my simulated benchmark: the response time went from ~700ms to ~50ms on a page with 25 items. There are more examples in the project’s README.

It wasn’t enough for DHH to just have the gem merged. He raised the stakes another notch: it would be great if these things were just automatic when the individual template started with a cache call.

It took me a while to understand what the hell David even meant by automatic here. The point is if the template a collection renders starts with a cache call, like this:

    <% cache notification do %>
      <%# notification shindigs %>
    <% end %>

Then rendering the collection should read several cached partials at a time:

    Caches multi read:
    - views/notifications/1-20150504154024703289000/7f95da08e86e00fbd3e1e6d65720e1bd
    - views/notifications/2-20150504154024707736000/7f95da08e86e00fbd3e1e6d65720e1bd
    - views/notifications/3-20150504154024709532000/7f95da08e86e00fbd3e1e6d65720e1bd
    # …

When rendered like:

    <%= render @notifications %>
    <%= render partial: 'notifications/notification', collection: @notifications, as: :notification %>

Those cache calls will generate a cache key to store the rendered template under. To make a bulk lookup we need to know how to generate those same cache keys. That way we can get back the contents.
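To make the difference between per-template lookups and a bulk lookup concrete, here is a minimal sketch in plain Ruby. `ToyCache`, its `round_trips` counter, and `key_for` are hypothetical names made up for this illustration; the only thing borrowed from Active Support is the idea of a `read_multi` call that takes several keys at once. The keys are simplified and leave out the timestamp and digest.

```ruby
# Toy stand-in for a cache store. ToyCache and round_trips are hypothetical
# and exist only to make the number of lookups countable.
class ToyCache
  attr_reader :round_trips

  def initialize(entries = {})
    @entries = entries
    @round_trips = 0
  end

  # One lookup per call.
  def read(key)
    @round_trips += 1
    @entries[key]
  end

  # Many lookups in a single call; returns only the keys that were found.
  def read_multi(*keys)
    @round_trips += 1
    @entries.slice(*keys)
  end
end

# Hypothetical key builder: real keys also carry a timestamp and a digest.
def key_for(id)
  "views/notifications/#{id}"
end

ids = [1, 2, 3]
entries = ids.to_h { |id| [key_for(id), "<li>notification #{id}</li>"] }

# The old way: ask the cache once per template.
one_by_one = ToyCache.new(entries)
html_single = ids.map { |id| one_by_one.read(key_for(id)) }

# The batched way: generate every key up front, then ask once.
batched = ToyCache.new(entries)
keys = ids.map { |id| key_for(id) }
html_batched = batched.read_multi(*keys).values_at(*keys)

puts one_by_one.round_trips
puts batched.round_trips
```

Both approaches return the same HTML; the batched one only works because every key can be generated before anything is rendered.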

Vault and Keys

Whenever you call cache in a template Rails will automatically look for render calls inside the template. Those inner render calls declare a dependency on those inner templates. But how does Rails keep track of the dependencies? We wouldn’t know that from just a regular cache key. The good thing is Rails doesn’t generate any old cache key. The generated key will include a digest of the dependencies.

That’s heady, so here’s an example:

    # bugs/_bunny.html.erb
    <% cache bunny do %>
      <%= render bunny.carrots %>
    <% end %>

    # carrots/_carrot.html.erb
    <%# Hey, I’m a dependency %>
    <%= carrot.name %>

See, there’s a dependency between a bunny and its carrots. The carrot template even starts with a nice comment, a kind of “What’s up”-doc.

Now Rails knows that bunny’s cache key will need to include a digest of _carrot.html.erb. You might think Rails would need to do something special because bunny is rendering a carrot collection. But that doesn’t matter; it’s only the templates Rails cares about.

This setup would generate logs like so:

    # First render
    Write fragment views/bunnies/1-20150518114325852668000/45a210f34898e8c2e5c8f77b297640f5 (7.6ms)

    # Subsequent renders
    Read fragment views/bunnies/1-20150518114325852668000/45a210f34898e8c2e5c8f77b297640f5 (0.2ms)

Whoa, those next renders are cashing in some milliseconds. Note that those fragments can be nested in other caches; that’s why they’re called fragments in the logs.

The cache key itself is composed like: views/ plural class name / id - updated_at / template tree digest. This way there’ll be no fragment to read if the record’s been updated, so the harness will render it again with a new cache key. But what happens if a dependency changes? That’s where the mystical template digest comes in.
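Here is a rough sketch of that composition in plain Ruby. `Bunny` is a hypothetical Struct standing in for a model, `fragment_key` is an assumed helper rather than a Rails API, and the digest is just an MD5 of a placeholder string. The plural name is passed in by hand because plain Ruby has no inflector, and the timestamp format is inferred from the log lines above.

```ruby
require "digest"

# Hypothetical stand-in for an Active Record model.
Bunny = Struct.new(:id, :updated_at)

# Assumed helper, not a Rails API:
# views/<plural class name>/<id>-<updated_at>/<template tree digest>
def fragment_key(record, plural_name:, template_digest:)
  # Timestamp down to nanoseconds, matching the shape seen in the logs.
  stamp = record.updated_at.utc.strftime("%Y%m%d%H%M%S%9N")
  "views/#{plural_name}/#{record.id}-#{stamp}/#{template_digest}"
end

# Placeholder digest; the real one covers the template and its dependencies.
digest = Digest::MD5.hexdigest("<% cache bunny do %>...<% end %>")
bunny  = Bunny.new(1, Time.utc(2015, 5, 18, 11, 43, 25, 852_668))

before = fragment_key(bunny, plural_name: "bunnies", template_digest: digest)

# Touching the record changes updated_at, and with it the key.
bunny.updated_at += 60
after = fragment_key(bunny, plural_name: "bunnies", template_digest: digest)

puts before
puts after
```

The old fragment is never overwritten here; it simply stops being asked for once the record’s key moves on.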

Finding the digest of a template is like extracting its DNA - a unique way to automatically id it. This data structure gives us a foundation where we can extrapolate some knowledge about our code. These things we know to be true now:

A. If two digests are the same then they represent the same content.

B. If a digest has changed its template has changed too.

C. If a template changes its cache key has changed too.

D. Any other template which renders a changed template has its cache key changed too.

A lets us id and find the cached partials again, while B and C let us bust the cache at the right time. D ensures cache busting bubbles up, like this:

    # _template_with_dependency.html.erb
    <% cache @kitten do %>
      <%= render 'kitten' %>
    <% end %>

    # _kitten.html.erb
    SOFT KITTEN

Our first template has a dependency on our kitten template, so its cache key will include a digest of the kitten template. Then if we change the kitten template:

    # _kitten.html.erb
    SOFT KITTEN SLEEPING ON BLANKET

The first template will generate a different cache key than before. This way it won’t be found in our cache and it’s rendered again. Russian doll caching goes to your head quickly, so don’t worry if it doesn’t feel like “Nostrovia” time just yet.
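The bubbling can be sketched with a tiny digest function in plain Ruby. This is an illustration under stated assumptions, not how Rails does it: `tree_digest` and `RENDER_CALL` are hypothetical names, a dependency is taken to be any `render 'name'` call in the source, and the real dependency tracker understands many more forms than that one pattern.

```ruby
require "digest"

# Assumption: a dependency is any `render 'name'` call in the source.
RENDER_CALL = /render\s+'(\w+)'/

# Digest of a template's own source plus the digests of everything it renders.
def tree_digest(name, templates)
  source = templates.fetch(name)
  dependency_digests = source.scan(RENDER_CALL).flatten.map do |dependency|
    tree_digest(dependency, templates)
  end
  Digest::MD5.hexdigest([source, *dependency_digests].join("-"))
end

templates = {
  "template_with_dependency" => "<% cache @kitten do %><%= render 'kitten' %><% end %>",
  "kitten" => "SOFT KITTEN"
}

before = tree_digest("template_with_dependency", templates)

# Only the kitten template changes; the outer template's source is untouched.
changed_templates = templates.merge("kitten" => "SOFT KITTEN SLEEPING ON BLANKET")
after = tree_digest("template_with_dependency", changed_templates)

puts before == after
```

The outer template’s digest moves even though its own source did not, which is rule D in action.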

The thing to keep in mind here is if we can generate the same cache key, then we can read back from the cache. But if the template isn’t cached we need to render it and write the output to the cache. For that we need to know if it’s okay for the collection to add the template to the cache. We need to know if the template is eligible.

Eligible Templates

To take part in this a collection’s rendered templates need to follow a cache convention. Take a look at these different versions of notifications/_notification.html.erb:

    # We expect a cache call on the first line,
    # and we expect a notification partial to cache a notification:
    <% cache notification do %>

    # But caching anything else wouldn’t bust the cache right:
    <% cache notification.event do %>

    # On the other hand, you might want to use a different variable name:
    <% cache watch_notification do %>

    # And it would fast render just fine with:
    <%= render partial: 'notifications/notification', collection: @notifications, as: :watch_notification %>

    # Starting the partial with a comment works too:
    <%# I don’t screw anything up %>
    <% cache notification do %>

    # Heck, you can call cache like a crazy person!
    <% cache    notification do %>

    # And your old pals, the parentheses, are here too:
    <% cache(notification) do %>

If you were reading that section and thought “Hey, that’s /\A(?:<%#.*%>\n?)?<% cache\(?\s*(\w+\.?)/”, you probably know your way around regexes too well. Please seek help - find that AMA you use to substitute real therapy.
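For everyone else, here is how that pattern behaves against the variations above, in plain Ruby. The regex is copied verbatim from the text; the `eligible?` helper and the idea of comparing the captured name against the `as` name are assumptions for this sketch, not a description of the actual Rails internals.

```ruby
# The pattern quoted above, verbatim.
CACHE_CALL = /\A(?:<%#.*%>\n?)?<% cache\(?\s*(\w+\.?)/

# Hypothetical helper: a template counts as eligible when it opens with a
# cache call whose first argument is exactly the collection's local name.
def eligible?(source, as:)
  match = CACHE_CALL.match(source)
  !match.nil? && match[1] == as
end

plain       = "<% cache notification do %>"
other_thing = "<% cache notification.event do %>"
renamed     = "<% cache watch_notification do %>"
commented   = "<%# I don't screw anything up %>\n<% cache notification do %>"
spaced_out  = "<% cache    notification do %>"
parens      = "<% cache(notification) do %>"

puts eligible?(plain, as: "notification")
puts eligible?(other_thing, as: "notification")
puts eligible?(renamed, as: "watch_notification")
puts eligible?(commented, as: "notification")
puts eligible?(spaced_out, as: "notification")
puts eligible?(parens, as: "notification")
```

Note the trailing `\.?` in the capture: for `notification.event` the captured name is `notification.` with the dot attached, so it can’t equal the local name and the template is skipped.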

This support is not exclusive to ERB templates. Any other handler can take part in this by implementing resource_cache_call_pattern. Now if you’re feeling that contributors’ trigger finger, submit a pull request to Haml or Slim.

Rounding it up

If you render a collection like:

    <%= render @notifications %>
    <%= render partial: 'notifications/notification', collection: @notifications, as: :notification %>

And the notification partial is eligible, we’ll automatically issue a single read for all of those partials that are cached. The partials that aren’t cached are rendered and cached, so the next time the collection renders there’ll be even more templates to read from the cache at once.
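A minimal sketch of that read, render, and write cycle in plain Ruby follows. `render_collection` is a hypothetical function, a Hash stands in for the cache store, the keys are simplified, and the `rendered_ids` array exists only so we can see which partials actually had to be rendered.

```ruby
# Hypothetical renderer: `cache` is a plain Hash standing in for the store,
# and rendered_ids records which partials missed the cache.
def render_collection(ids, cache, rendered_ids)
  keys = ids.map { |id| "views/notifications/#{id}" }
  hits = cache.slice(*keys) # stands in for the single bulk read

  ids.zip(keys).map do |id, key|
    hits.fetch(key) do
      # Cache miss: render the partial and store it for next time.
      rendered_ids << id
      cache[key] = "<li>notification #{id}</li>"
    end
  end
end

# Only the first notification is cached to begin with.
cache = { "views/notifications/1" => "<li>notification 1</li>" }

first_rendered = []
first_html = render_collection([1, 2, 3], cache, first_rendered)

second_rendered = []
second_html = render_collection([1, 2, 3], cache, second_rendered)

p first_rendered
p second_rendered
```

The first pass renders only the two missing partials; the second pass renders nothing and is served entirely from the bulk read.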

All you have to do is write eligible templates and your rendering times will plummet. That’s how we’re making collection rendering faster in Rails 5.

Take a look at the implementation in the original pull request: https://github.com/rails/rails/pull/18948. You can also try comparing my revised code against the multi_fetch_fragments gem’s original implementation. Once you’ve compared those, look at the second commit to see how we made it automatic.

You can also go behind the words and see the code for the benchmark above.